6. Load Invoices Historical Data

Invoices - API Documentation

In the last article, we had set up a data pipeline for invoices, which adds one day's invoicing data in each pipeline run, starting from the day the pipeline first ran. This article details the steps to load historical invoice data for analysis and reporting.

Pipeline Configuration

Execution Schedule: Run it only once and load the data for 2022 and 2023.

Data load strategy: Fetch all Invoices records that have been created or modified between Jan 1, 2022 and Dec 31, 2023. Historical data load follows the same data dedupe and load strategy as the Invoices pipeline in the previous article, and therefore uses the same pipeline. No new pipeline needs to be written to run a historical data load.

Data schema management: The Invoices API response may have evolved over time, with records from 2022 missing some fields as compared to 2023. Schema evolution is handled automatically.

Data storage:

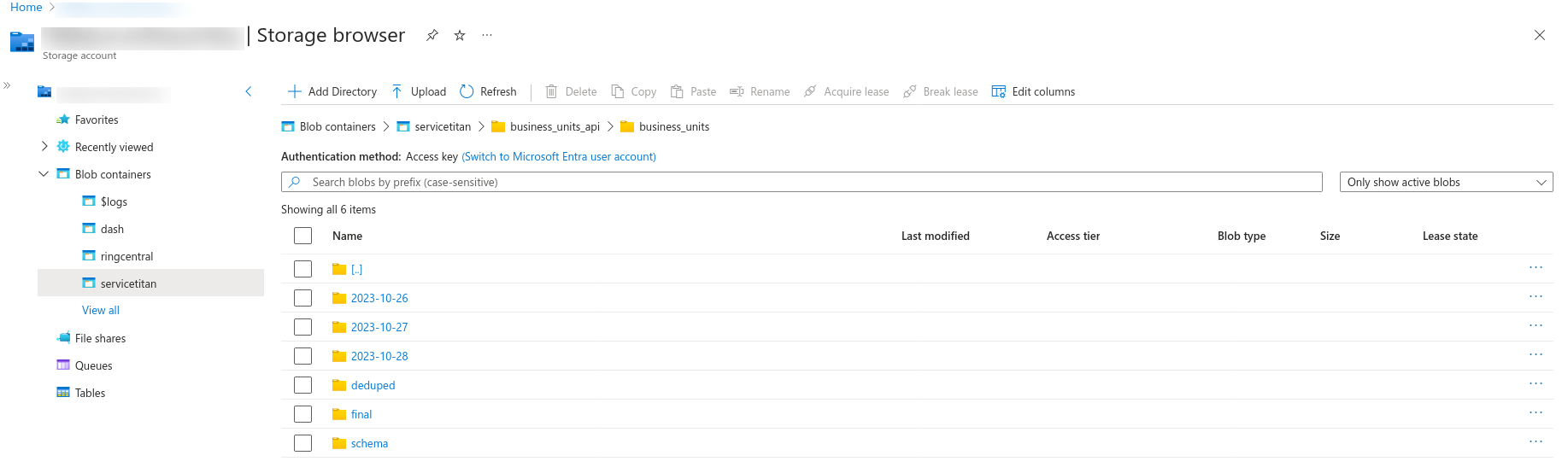

Azure Blob - In the Azure blob create a folder for Invoices. Within Invoices folder create a folder for the historical data load.

Azure SQL - Write data into the 'invoices' table in the 'servicetitan' schema.

Historical Load Setup

The Invoices pipeline takes the 'modifiedOn' field as a parameter, with the value provided to the pipeline using a variable called 'yesterday'. We will use the same variable to load historical data for 2022 and 2023.

If the variable has a value of 01-01-2022, then the pipeline will load data for Jan 1, 2022. If the variable value is 02-01-2022, then the pipeline will load data for Jan 2, 2022, and so on. By submitting one pipeline for each date from Jan 1, 2022 to Dec 31, 2023, we can load the required historical data.

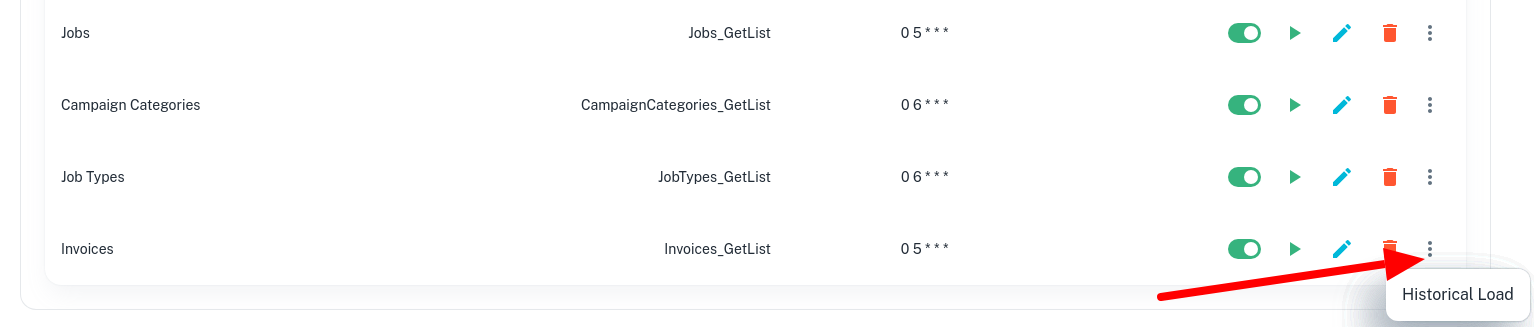

- Go to Integrations -> {{Your integration}} -> Invoices -> ellipsis (three dots) to the right of the Delete icon.

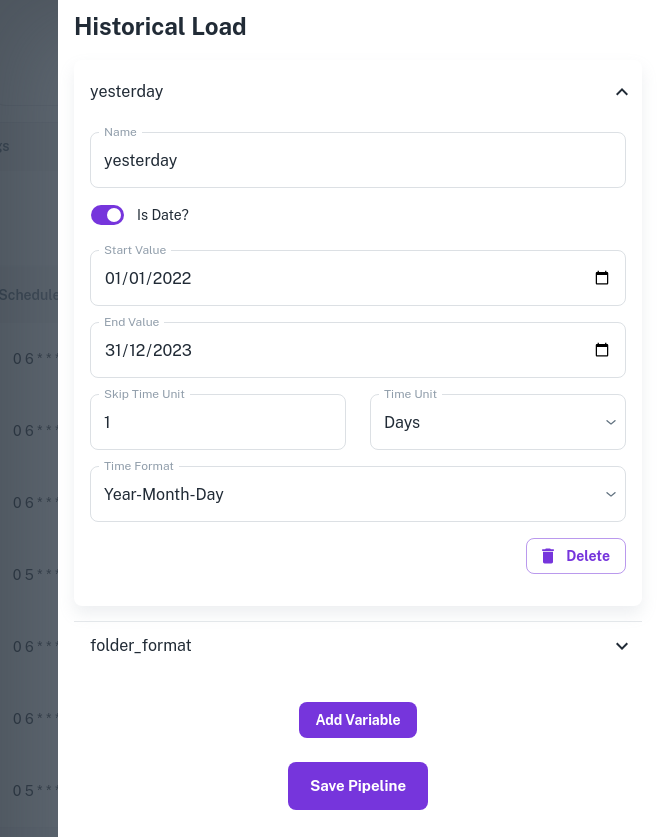

- A new blade opens, listing the two variables in the Invoices pipeline - 'yesterday' and 'folder_format'.

Select the 'yesterday' variable and fill in the values as shown below.

Skip Time Unit is 1 day. This means that DataStori will submit one pipeline run for each of dates between Jan 1, 2022 (Start Value) and Dec 31, 2023 (End Value), which is a total of 730 (= 365 days x 2 years) pipeline runs.

Ignore the 'folder_format' variable or specify a pattern if you want a separate folder nomenclature for historical data load.

Save the pipeline, and DataStori will run the Invoices pipeline for the 730 dates in 2022 and 2023.

The 730 pipeline runs will execute sequentially to avoid any race conditions between different pipeline runs acting on the same data.

The dedupe logic and the destination are picked up from the Invoices pipeline configuration.

Historical loads are usually long running processes. Ensure that you allocate enough time and capacity while running historical loads.